Title: TIA-Net: A Multi-Modal Land Use Recognition Method Based on the Ternary Interactive Attention Mechanism

Abstract

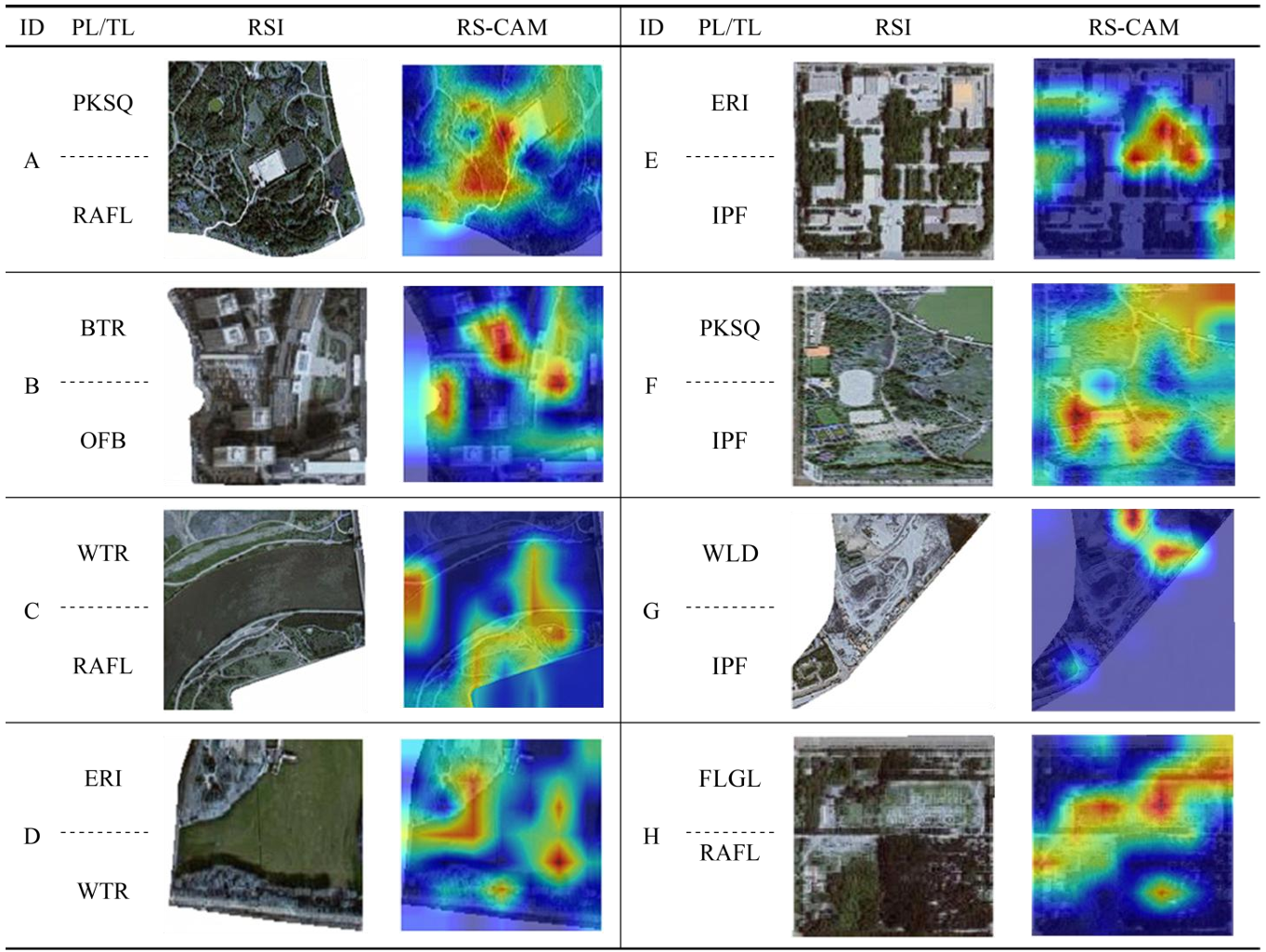

As demand for fine-grained urban management grows, methods that rely on a single data source or on coarse-grained land use classes are no longer sufficient. This paper therefore proposes a parcel-level, fine-grained land use recognition model, the Triple Interaction Attention Network (TIA-Net). TIA-Net integrates three modalities: remote sensing imagery (RSI), semantic information from points of interest (POI), and temporal population density (TPD). Swin-BiFPN extracts multi-scale spatial features from RSI, HydraMultiRocketPlus models the temporal dynamics of population mobility, and a POI encoder characterizes the distribution of human activities. Building on these encoders, the Feature-preserving Triple Interaction Self-Attention (FP-TISA) module achieves deep fusion across the spatial, semantic, and temporal dimensions. It captures nonlinear interactions among the heterogeneous data while reducing the feature loss that is common in traditional methods. On the national land use dataset CN-MSLU-100K, TIA-Net achieves a test accuracy of 77.64%, a Kappa coefficient of 0.740, and a macro-average precision of 65.20%, all of which outperform the existing baseline models. The gain is largest for macro-average precision, where TIA-Net achieves nearly double the performance of the baseline model. Further analysis with Grad-CAM++ and attention visualization reveals which key areas the model focuses on and how its cross-modal interaction mechanism works. In summary, TIA-Net improves both the accuracy and the interpretability of land use classification, and provides technical support for territorial spatial planning and natural resource management.
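The three metrics reported above can all be derived from a single confusion matrix. The following is a minimal sketch using their standard definitions. It is not the authors' evaluation code, and the function names and the toy labels are illustrative assumptions rather than anything taken from CN-MSLU-100K.

```python
# Minimal sketch of overall accuracy, Cohen's kappa, and macro-average precision.
# All names and the toy data below are hypothetical, for illustration only.

def confusion_matrix(y_true, y_pred, n_classes):
    """m[t][p] counts samples of true class t predicted as class p."""
    m = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        m[t][p] += 1
    return m

def overall_accuracy(m):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in m)
    return sum(m[i][i] for i in range(len(m))) / total

def cohen_kappa(m):
    """Agreement corrected for chance: (p_o - p_e) / (1 - p_e)."""
    n = len(m)
    total = sum(sum(row) for row in m)
    p_o = sum(m[i][i] for i in range(n)) / total
    row_sums = [sum(m[i]) for i in range(n)]
    col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
    p_e = sum(row_sums[i] * col_sums[i] for i in range(n)) / (total ** 2)
    return (p_o - p_e) / (1 - p_e)

def macro_precision(m):
    """Unweighted mean of per-class precision; rare classes count as much as common ones."""
    n = len(m)
    per_class = []
    for j in range(n):
        predicted_as_j = sum(m[i][j] for i in range(n))
        # Convention assumed here: a class that is never predicted scores 0.
        per_class.append(m[j][j] / predicted_as_j if predicted_as_j else 0.0)
    return sum(per_class) / n

if __name__ == "__main__":
    # Toy imbalanced example: class 0 dominates, classes 1 and 2 are rare.
    y_true = [0, 0, 0, 0, 0, 0, 1, 1, 2, 2]
    y_pred = [0, 0, 0, 0, 0, 1, 1, 0, 2, 2]
    m = confusion_matrix(y_true, y_pred, n_classes=3)
    print(f"accuracy        = {overall_accuracy(m):.4f}")
    print(f"kappa           = {cohen_kappa(m):.4f}")
    print(f"macro precision = {macro_precision(m):.4f}")
```

Because macro averaging weights every class equally, it is sensitive to errors on rare land use classes. This is one plausible reason a model's macro-average precision can sit well below its overall accuracy on an imbalanced dataset.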

Keywords

Attention Mechanism; Deep Learning; Interpretability; Land Use Classification; Multi-modal Data Fusion

IEEE Transactions on Geoscience and Remote Sensing
