Title: A multimodal data fusion model for accurate and interpretable urban land use mapping with uncertainty analysis

Abstract

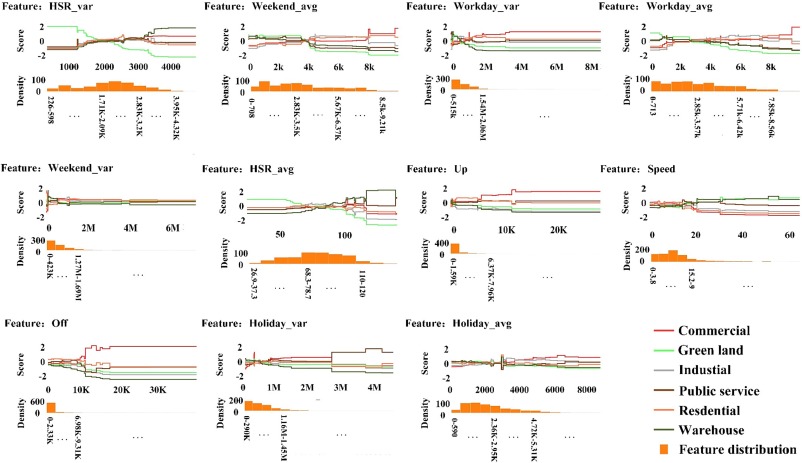

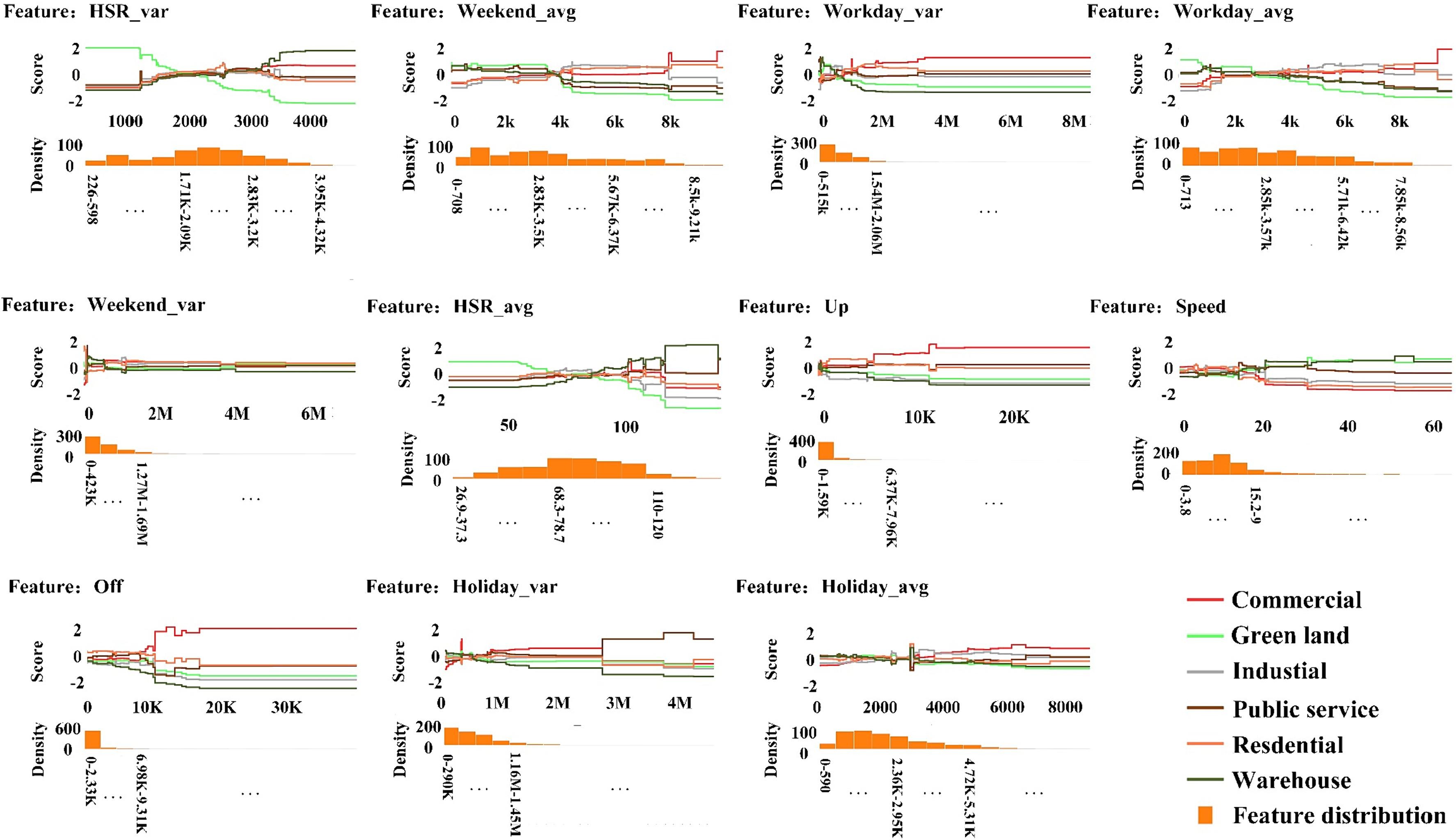

Fusing multimodal data can improve the accuracy of urban land use mapping. However, many studies consider only the socioeconomic and physical attributes within land parcels, neglecting spatial interaction and the uncertainty introduced by multimodal data. To address these issues, we constructed a multimodal data fusion model (MDFNet) that extracts natural physical, socioeconomic, and spatial connectivity ancillary information from multimodal data. We also established an uncertainty analysis framework, based on a generalized additive model and a learnable weight module, to explain data-driven uncertainty. Shenzhen was chosen as the demonstration area. The results demonstrated the effectiveness of the proposed method, with a test accuracy of 0.882 and a Kappa of 0.858. Uncertainty analysis indicated that remote sensing, social sensing, and taxi trajectory data contributed 0.361, 0.308, and 0.232, respectively, to the overall task. The study also illuminates the collaborative mechanism of multimodal data across land use categories, offering an accurate and interpretable method for mapping urban distribution patterns.
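The abstract reports a test accuracy and a Kappa but does not say how they were computed. As a minimal sketch, assuming the standard definitions of overall accuracy and Cohen's kappa over a confusion matrix, the two metrics can be derived as follows. The function name and the toy 2x2 matrix are illustrative assumptions and are not the paper's data.

```python
def accuracy_and_kappa(confusion):
    """Overall accuracy and Cohen's kappa from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    # Observed agreement: share of samples on the diagonal.
    observed = sum(confusion[i][i] for i in range(n)) / total
    # Chance agreement: product of row and column marginals, summed over classes.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(n)) for j in range(n)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical two-class confusion matrix, for illustration only.
toy_confusion = [[45, 5], [10, 40]]
acc, kappa = accuracy_and_kappa(toy_confusion)
print(f"accuracy={acc:.3f}, kappa={kappa:.3f}")
```

Kappa discounts the agreement expected by chance, which is why it is lower than the raw accuracy for the same predictions.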

Keywords

Urban land use mapping; Multimodal data fusion; Uncertainty analysis; Feature extraction; Deep learning

International Journal of Applied Earth Observation and Geoinformation
